Tesla’s “Elon Mode” Under Fire: Unpacking the Hidden Feature That Could Reshape the Future of Driver Safety and Autonomy

An undocumented feature, informally known as “Elon Mode,” has put Tesla's driver-assistance systems under the direct scrutiny of federal safety regulators. This hidden setting, which reportedly bypasses the safety alerts meant to keep drivers attentive, has sparked a heated debate over the limits of autonomous technology and the role of the driver. With the National Highway Traffic Safety Administration (NHTSA) demanding detailed data from the automaker, the consequences of this revelation could reshape the future of vehicle autonomy. This is not a technical glitch; it is a turning point in the ongoing story of innovation versus oversight.

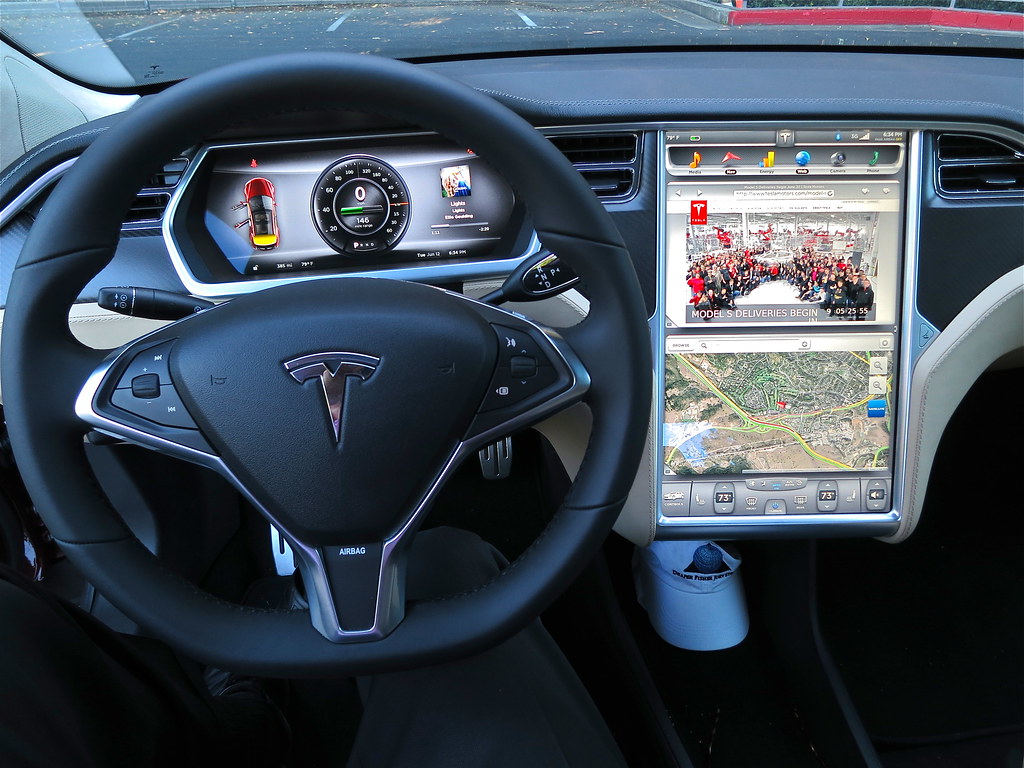

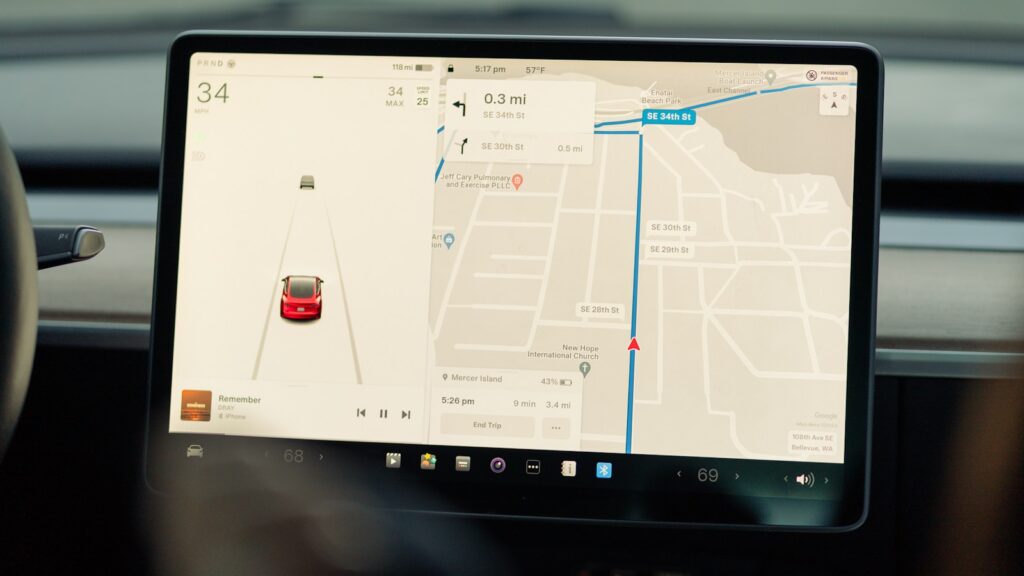

Fundamentally, “Elon Mode” is a radical departure from the usual operation of Tesla's Autopilot and Full Self-Driving (FSD) options. Typically, these systems use a sequence of escalating warnings, starting with visual indicators on the touchscreen and progressing to audible beeps, to make sure drivers keep their hands on the steering wheel. If a driver continues to ignore these warnings, the system temporarily disables the advanced driver-assistance features, enforcing a critical layer of human control. The newly unearthed “Elon Mode,” however, is said to remove this vital “nag,” enabling extended hands-free operation without the usual safety warnings. In effect, it bypasses the very mechanisms designed to keep the driver engaged.
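The escalation described above can be pictured as a simple threshold ladder. The following Python sketch is purely illustrative and is not Tesla's code: the names `Alert`, `NagConfig`, and `alert_level`, the timing thresholds, and the `nag_enabled` switch are all hypothetical, chosen only to show how warnings might escalate and what disabling the nag would mean in such a scheme.

```python
from dataclasses import dataclass
from enum import Enum


class Alert(Enum):
    NONE = "none"
    VISUAL = "visual"          # message on the touchscreen
    AUDIBLE = "audible"        # beep
    DISENGAGED = "disengaged"  # driver assistance temporarily switched off


@dataclass(frozen=True)
class NagConfig:
    # All thresholds are invented placeholders, in seconds.
    visual_after: float = 10.0
    audible_after: float = 20.0
    disengage_after: float = 30.0
    # Hypothetical switch; False models the reported hidden setting.
    nag_enabled: bool = True


def alert_level(seconds_without_torque: float, cfg: NagConfig) -> Alert:
    """Map time since steering torque was last detected to an alert level."""
    if not cfg.nag_enabled:
        return Alert.NONE  # no warnings and no disengagement at all
    if seconds_without_torque >= cfg.disengage_after:
        return Alert.DISENGAGED
    if seconds_without_torque >= cfg.audible_after:
        return Alert.AUDIBLE
    if seconds_without_torque >= cfg.visual_after:
        return Alert.VISUAL
    return Alert.NONE
```

Under these assumed thresholds, a driver who stays hands-off would see the alert climb from visual to audible to disengagement, while the same driver with `nag_enabled=False` would never be warned, which is the behavior regulators are asking about.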

The existence of this hidden setting was first revealed by a security researcher who goes by the handle @GreentheOnly. The researcher, who has tested features of Tesla vehicles for years and reported bugs to the company, disclosed a secret configuration that Tesla can unlock to enable extended hands-free driving. The researcher, whose identity is known but who remains pseudonymous for privacy reasons, dubbed the setting “Elon Mode,” though that is not Tesla's internal name for it. The Verge reported the discovery in June, bringing it to wider public attention.

After these revelations, NHTSA escalated its concerns, sending a letter and special order to Tesla on July 26. The order was not an isolated action but part of NHTSA's larger ongoing investigation into incidents in which Tesla vehicles collided with emergency vehicles. The agency's main concern was an Autopilot configuration that lets drivers travel long distances without Autopilot prompting them to apply torque to the steering wheel. The order was detailed: it demanded the number of cars and drivers Tesla had authorized to use this mode, how the mode is accessed, why it was included in consumer vehicles, and any history of crashes or near-misses.

NHTSA's Investigation and Tesla's Confidential Response

The seriousness of the situation was underscored by the words of a senior official at the agency. Donaldson, NHTSA's acting chief counsel, said the agency is worried about the safety implications of recent changes to Tesla's driver monitoring system. He explained that the concern rests on available information suggesting that vehicle owners may be able to alter Autopilot's driver monitoring settings so that they can drive in Autopilot for extended periods without being prompted to apply torque to the steering wheel. Donaldson warned that the resulting relaxation of controls meant to keep the driver engaged in the dynamic driving task could lead to greater driver inattention and a failure to properly supervise Autopilot.

Tesla filed its response to NHTSA by the required deadline of August 25, as the federal order demanded, and requested and was granted confidential treatment. That confidentiality keeps the details of its explanation out of public view and leaves most questions from the public and the media unanswered. Despite inquiries from leading news outlets, including CNBC, Insider, and CNN, Tesla has notably made no official statement about “Elon Mode” or the NHTSA order. The reported feature also stands in sharp contrast to the clear instructions in the company's own manual, which states that drivers using Autopilot must keep their hands on the steering wheel at all times, a basic principle of road safety that “Elon Mode” appears to undermine.

Musk's Public Actions and the Safety Community's Reactions

Adding to regulators' unease are the actions and past comments of CEO Elon Musk, which seem to contradict the company's strict driver-engagement policies. In December of last year, Musk tweeted about a software update that would let some Tesla drivers disable the nag prompts, a change that has not been fully rolled out. In April 2023, he said the company was gradually reducing the nag in proportion to improved safety. Most recently, in a high-profile livestream earlier this month, Musk was seen driving a Tesla in Palo Alto, California, while actively using a phone, which violates not only the company's own rules but also the law. During this FSD software demonstration, viewers observed that Musk did not always have his hands on the steering yoke, prompting Greg Lindsay, an Urban Tech fellow at Cornell, to remark that the whole drive was like waving a red flag in front of NHTSA.

The automotive safety community has been sharply critical of the ethical and safety implications of such a feature. Philip Koopman, an automotive safety researcher and associate professor of computer engineering at Carnegie Mellon University, argued that hidden features that weaken safety have no place in software development. He said NHTSA takes a dim view of cheat codes that allow safety systems such as driver monitoring to be disabled, and stated directly, “Hidden features that compromise safety do not belong in production software.” Bruno Bowden, a machine learning expert, noted that during Musk's livestream the Tesla system nearly ran a red light and that Musk had to brake in time to avoid danger, which further highlights the system's current limitations.

Misleading Language and Reported Safety Problems

One of the main ethical and regulatory criticisms concerns the language Tesla uses to describe its systems. Terms such as Autopilot and Full Self-Driving imply a degree of autonomy that the technology, which is classified as SAE Level 2 driver assistance, clearly lacks. The Level 2 designation requires the human driver to supervise the vehicle at all times, unlike truly self-driving (SAE Level 4 or 5) vehicles, which are not yet on the consumer market. Consumer advocacy groups argue that this branding is misleading, creates a false sense of safety, and encourages drivers to misuse the system, leading to dangerous situations. CleanTechnica's Steve Hanley captured the sentiment when he said that Autopilot has always been a potentially deceptive term that writes checks it cannot cash.

These issues are not just theoretical; they rest on a documented history of incidents and regulatory action. By 2025, Tesla's Autopilot had been associated with more than 1,000 reported incidents, some of them fatal, including crashes with parked emergency vehicles. NHTSA has opened several inquiries into these events. In February 2023, Tesla voluntarily recalled 362,758 vehicles, saying that its Full Self-Driving Beta system could lead to crashes because it permits unsafe actions at intersections. In December 2023, a further recall covered more than two million cars because of the risk of Autosteer misuse, highlighting the continuing difficulty of keeping drivers engaged and the systems safe.

Data Collection, Privacy Issues, and Liability

Tesla's approach to data collection is undoubtedly one of the factors behind its rapid technological development. Every Tesla on the road is a complex, moving data source, constantly sending driving behavior, environmental information, and incident reports back to the company. This large volume of real-world data lets Tesla refine its software at a rapid pace, which feeds further innovation. However, data collection on this scale also raises serious privacy concerns, because drivers do not always know how much data is being collected and stored. That leads to a contested question: do the safety benefits justify the surveillance, and who ultimately owns this valuable driving data, Tesla or the driver?

Complicating these ethical issues further is the vexed question of accountability and liability when accidents involve semi-autonomous systems. With a partially self-driving car, establishing fault is a complicated legal and ethical problem. Is the driver at fault, given the legal responsibility to stay alert and be ready to take control, or is the blame shared with Tesla, which created and sold the underlying technology? Tesla's longstanding strategy of innovating aggressively and responding to regulators afterward has often caused tension, leading to recurring conflicts with legislators and safety authorities around the world. Courts worldwide are now struggling with these thorny questions, which test the boundaries of liability in the era of advanced driver-assistance systems.

Tesla's Long-Term Autonomy Vision and Current Problems

Finally, the “Elon Mode” controversy and the ongoing regulatory investigation are only chapters in a much bigger story that Elon Musk envisions. His vision reaches far beyond what Autopilot can do today: a future of ubiquitous, fully autonomous driving in which robotaxis replace human drivers and Tesla cars earn money while their owners are not driving. That ambition, however, hangs by a thread and depends on how the company weathers the current wave of lawsuits, regulatory challenges, and growing consumer distrust. Every misstep and every new scandal risks undermining the foundation of this long-term, transformational goal.

As federal regulators peel back more layers of Tesla's driver-assistance systems, the emergence of “Elon Mode” is a powerful reminder of how fragile technological ambition can be and of the fact that safety cannot be compromised. The unfolding drama illustrates the high stakes the automotive industry faces as it moves deeper into self-driving capabilities. The issues raised by this hidden feature extend far beyond Tesla and reach the core of trust, accountability, and the responsible use of artificial intelligence in everyday life. The mobility of the future requires not only innovation but also care and an uncompromising commitment to public welfare. The future of the road, like the technology itself, is still under active development.