Can You Truly Nap While Your Car Drives Itself? Unpacking the Complexities of Autonomous Vehicle Safety and Legality

The idea of dozing off while your car smoothly handles busy roads has always fascinated people. Driverless vehicles are no longer just a dream from old sci-fi movies, and that makes the idea feel real and urgent today. With smart sensors and AI now handling much of the driving, one big question comes up for daily commuters and road-trippers alike: can you actually relax, safely and legally, while the machine takes over?

This isn’t just about convenience. It touches on how the relationship between people and machines is changing, and it shakes up old ideas about who’s in charge, who’s accountable, and whom we rely on. Less tired drivers and safer streets, thanks to fewer human mistakes, sound great. But today’s self-driving technology and the messy patchwork of laws around it reveal a situation far more complex than it first seems. Moving from sci-fi fantasy to everyday use involves tough technical problems, confusing rules, and big shifts in what people believe.

To figure out whether you’ll ever be able to snooze in your car, let’s break down what “self-driving” really means by looking at the different levels of automation and at today’s laws on driver behavior. We’ll look at current technical limits, point out where innovation stands relative to the law, and unpack why this moment feels like a turning point for how we get around.

1. Understanding Autonomy Levels: The SAE Framework Used by NHTSA

To talk about napping in a driverless vehicle, you first need to understand what “self-driving” actually involves. People use the phrase as if it meant one thing, but in practice it covers a range of automation levels, with more variety than most people assume. The standard scale, defined by SAE International and adopted by NHTSA, runs from Level 0 (no automation) to Level 5 (full automation).

Why the differences between levels matter:

- Automation levels show what kind of help a person behind the wheel actually gets.

- Figuring out these differences can stop serious mix-ups.

- Each level defines what the human is still responsible for.

- Misjudging what a system can do can lead to risky decisions behind the wheel.

These categories aren’t just about technology; they form the basis for today’s safety rules and legal duties. From features that assist the driver to systems that attempt to drive entirely on their own, each level places different demands on the person inside. Ignoring those differences can cause serious misunderstandings about what a car can actually do and how safe it really is.

Whether a car drives itself a little, sometimes, mostly, or completely makes all the difference. Without that distinction, any talk of napping behind the wheel is just guesswork, and potentially dangerous guesswork. Rather than relying on assumptions, use the SAE levels adopted by NHTSA: they spell out what the vehicle handles, where it falls short, and when you still need to pay attention. Skip this step, and you’re lost before you start, especially when asking whether relaxing behind the wheel is actually possible today.

2. Level 2 & 3: Why You Can’t Sleep Now (The Legal & Practical Imperative)

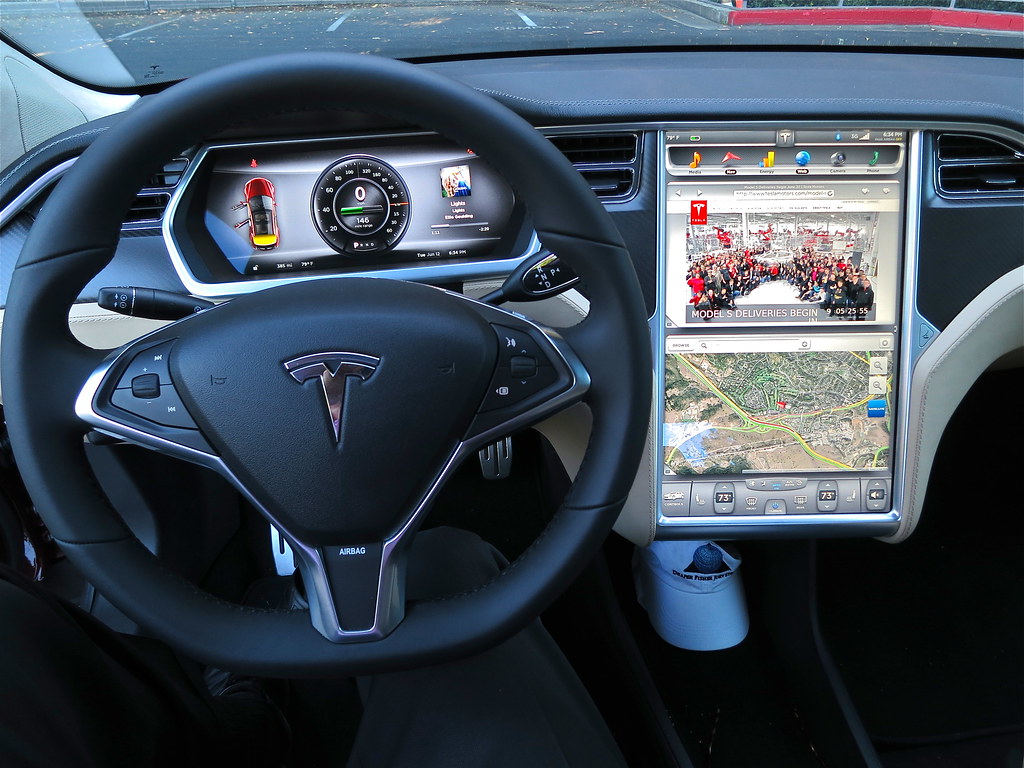

Most so-called “self-driving” cars you can buy in 2025 sit at Level 2 or Level 3 on the automation scale NHTSA uses. Even though they do a lot, they still need someone behind the wheel, so no naps allowed. Level 2, called partial automation, includes systems such as Tesla’s Autopilot and GM’s Super Cruise: the car manages steering and speed, but the driver must keep watching closely. Despite that help, the person behind the wheel remains legally responsible.

What today’s partial automation still demands:

- People still need to pay attention, no matter how much a car drives itself.

- Level 2 systems need constant supervision; Level 3 systems let drivers look away only in specific conditions, and drivers must be ready to take over when asked.

- Legal liability generally stays with the person, not the machine, so the driver is usually the one blamed if things go wrong.

- Sleeping violates the system’s terms of use and also breaks road regulations.

One step up, Level 3, called conditional automation, lets the vehicle handle the driving in certain situations, but it can ask the driver to take over at any time. So you have to stay ready to step in without delay. The bottom line: the technology isn’t perfect, which makes you the last line of defense. Because you must be able to respond quickly, dozing off isn’t just risky; it breaks the basic rules of how these systems are designed to work.

The consequences of ignoring these rules can be serious, as past cases show. Some drivers have faced reckless driving charges after falling asleep or failing to monitor their semi-autonomous cars. One widely shared clip from 2023 showed a man asleep in a Tesla on a busy Massachusetts highway; he later faced legal trouble. Each case makes the same point: advanced car technology doesn’t mean you can zone out or fully let go.

In short, calling Level 2 or Level 3 cars “self-driving” is misleading. Tesla’s own Autopilot support page stresses that even with Autopilot or Full Self-Driving engaged, the vehicle does not drive itself; it requires an attentive driver with hands on the wheel, ready to take over at any moment. Bottom line: you must stay awake when using these systems, and dozing off is never an option.

3. The Promise of Levels 4 & 5: When Napping Becomes a Possibility

The real possibility of napping on the road only appears with more advanced self-driving cars at Level 4 or Level 5. These levels mark a big step forward in what machines can do and change the human’s role in the process. At Level 4, called high automation, the car handles every part of driving, but only in certain places, such as defined regions, highways, or urban zones; within those areas, no person needs to take over while the system is running.

What higher levels of autonomy could change:

- Level 4 cars drive themselves, but only within the areas and conditions they were designed for.

- Within those limits, occupants may never need to step in.

- Level 5 means the car drives entirely on its own, with no human help needed.

- Whether you can snooze depends on both the law and how mature the technology really is.

In a Level 4 car operating within its designated area, dozing off shifts from strictly forbidden to potentially possible, depending mostly on local rules. The car runs itself even if the person inside isn’t paying attention. At that point, calling that person a “driver” no longer fits; they’re effectively a passenger. As long as conditions stay within the system’s limits, what people do inside the car can change completely.

At Level 5, called full automation, the car handles every road and every situation without help, and it may not even need a steering wheel. You could likely sleep inside, assuming the rules allow it. With no need for human control, the car becomes a moving lounge or office where relaxing or working simply makes sense.

It’s important to be clear: these levels aren’t widely available yet. Level 4 is mostly limited to tests, restricted trials, and robotaxi services in a handful of cities, and no Level 5 vehicle is on the market. Even though progress is fast, moving from Level 3 to widespread Level 4 and 5 deployment means clearing tough technical, legal, and public-trust hurdles. Napping while your car drives depends entirely on how high-level automation develops and earns people’s confidence; for now, it’s more hope than reality.

4. The Patchwork of US Laws: State-by-State Variations

One big roadblock to clear rules about napping in autonomous vehicles across the US is the patchy mix of state laws. Some states have detailed codes, while others barely address the topic. Because regulations aren’t aligned, confusion spreads quickly: even if the technology lets you snooze, local rules might forbid it, and progress slows as policies diverge from one state to the next. Right now, no uniform national rule clearly allows sleeping in a Level 4 self-driving car, much less in less advanced models. Instead, states follow inconsistent rules on driverless cars, and quite a few haven’t updated their laws for advanced automation at all.

Navigating laws that change from place to place:

- State rules on self-driving cars vary a lot from place to place.

- A few states address what occupants may do; others ignore the question entirely.

- Drivers should know regional laws before trusting self-driving features.

- Crossing a state line can change what’s allowed instantly, often without any warning.

This inconsistency means that something allowed, or simply unaddressed, in one state might be illegal in another. If you take a self-driving car across state lines, you have to research each state’s regulations, which is tough work most people won’t bother with. Laws haven’t caught up because technology moves faster than governments can respond.

Self-driving cars are permitted in some form across all 50 states, yet only 29 have specific rules for them, and fewer than five spell out what occupants can legally do inside. Because of this mismatch, drivers are left to figure things out on their own, even when the information is hard to find. Catching some shut-eye could still land you in trouble unless your state explicitly allows it.

For now, whether you can nap behind the wheel depends mostly on where you are and how well you know the local rules, since national guidelines haven’t caught up. Because laws keep shifting from place to place, advocates for change need to keep pressing lawmakers: the technology moves fast, but safety rules need to be clear and consistent for everyone.

5. California’s Strict Stance: Reckless Driving and Liability

California hosts a great deal of driverless car testing, so some see it as a leader in robotic vehicles. But if you’re thinking about zoning out or sleeping behind the wheel, California’s rules are among the toughest around, because current law stresses that drivers must stay in control and keep watching the road even when technology helps with steering or braking.

Key points of California’s strict approach:

- California expects constant, active human supervision.

- Sleeping while driving can count as reckless conduct.

- Blame for a crash generally falls on the person behind the wheel.

- Vehicle technology doesn’t override what the state considers safe.

Under California Vehicle Code section 23103, driving with willful or wanton disregard for the safety of people or property counts as reckless driving. Dozing off behind the wheel can fall into this category, even if your car has advanced assistance such as Tesla’s Autopilot. The main idea: if you aren’t actively supervising the driving, you could be breaking the law.

This strict legal framework means that, in semi-autonomous cars, risky behavior like dozing off can bring serious consequences, from traffic citations to lawsuits after a crash. As Weinstein Win points out, compensation after a collision usually depends on whether the person behind the wheel was paying attention and ready to take control. If you give up control by sleeping at the wheel in California, the blame will likely fall entirely on you, even if the car sometimes drives itself.

California’s stance sends a strong message to drivers elsewhere: advanced technology inside a car doesn’t free people from the basic rules of safe driving. Right now, sleeping behind the wheel there could land you in serious legal trouble, and it can look like deliberately ignoring well-known safety precautions. The takeaway is firm: when you’re behind the wheel, stay awake and fully aware.

6. Nevada’s Pioneering but Cautious Approach

Nevada holds a special place in self-driving car history: in 2011, it became the first state to authorize them. That early move might suggest looser rules on driver attention, but Nevada’s testing policies actually stress constant human awareness. For highly automated test vehicles, having someone on board who can take control at any moment remains essential.

Nevada’s safeguards for monitoring and safe operation:

- Nevada requires a trained person on board whenever self-driving cars are tested, ready to take control if needed. That person must hold a valid driver’s license, like any other motorist, and testing can’t happen without this backup behind the wheel.

- A special “G” endorsement shows that the operator has been trained specifically to supervise self-driving test vehicles.

- Vehicles must alert the operator when a failure requires taking back control.

- During testing, the state favors caution over hype.

Assembly Bill 511 and the DMV regulations that took effect on March 1, 2012, set strict requirements for self-driving cars. A licensed driver must be inside the car during testing, and that person must also hold a special “G” endorsement showing they are trained for this kind of testing. Even though the state welcomes innovation, safety remains the top priority.

Nevada also requires self-driving test cars to alert the person behind the wheel when the technology fails. That warning system makes one thing clear: the human is still in charge, even with sophisticated software running. Falling asleep at the wheel plainly violates those rules, no matter how capable the car is.

Nevada’s rules, though forward-thinking, reflect the broader consensus that human attention still matters at this stage of self-driving development. Even though the state helped open the door for these vehicles, its careful approach shows that the shift to fully hands-free driving, where napping might someday be possible, is moving slowly because safety comes first.

7. Federal Guidelines: A Glimpse into Future Passenger Status

Although states set most of the rules for self-driving cars, federal agencies such as NHTSA are becoming more involved. By mid-2025, NHTSA had begun issuing new guidance focused on Level 3 to Level 5 systems, hinting at a future in which occupants don’t need to drive at all.

How the driver’s role could change in highly automated cars:

- In Level 4 or 5 vehicles, people may ride as passengers rather than drivers.

- Law changes could one day make it okay to nap or tune out.

- Federal guidance doesn’t override state-specific laws.

- Until the rules catch up more widely, people need to follow local laws.

This guidance points to a major shift: people in Level 4 or Level 5 cars might soon be treated less as drivers and more as passengers. In that case, dozing off could become legally possible, at least in principle, because occupants would no longer need to stay alert behind the wheel. Instead of watching the road, they could tune out while the vehicle handles all driving tasks on its own.

Still, it’s important to understand that this federal guidance consists of recommendations, not binding law. For now, Washington mostly leaves it to each state to decide whether and how to apply it. So even if federal agencies hint at where things are heading, what counts on the road is your state’s law. Just because something is acceptable at the federal level doesn’t mean your local police will let it slide.

So even though NHTSA’s evolving guidance hints at a day when napping in self-driving cars might be acceptable, people should stay alert for now. If your state hasn’t passed laws allowing it in fully automated vehicles, falling asleep could get you into trouble. Federal rules lay the groundwork, but state lawmakers have the final say before napping on the road goes mainstream.

8. Public Sentiment and the Trust Deficit

Even though driverless cars are impressive technology, people still struggle to trust them, especially when it comes to sleeping inside one. Despite fast progress and increasingly strong safety claims, most people remain uneasy about handing control to a machine. That worry isn’t just talk; it shows up clearly in poll results revealing widespread doubt.

Addressing public skepticism in autonomous vehicle adoption:

- Most Americans say they wouldn’t trust a driverless car enough to sleep in it.

- Over 60% say system malfunctions are their biggest worry.

- Riders need to feel confident in the system before they can truly relax.

- Trust in self-driving systems needs real-world proof, not just lab results.

A 2024 study from The Zebra found that nearly 8 in 10 American adults wouldn’t feel comfortable sleeping in a driverless car. Asked why, 62 percent said they fear the technology might malfunction or fail mid-drive. Even though some see benefits in automation, most still don’t trust it enough to fully let go; relaxing inside one feels far too risky for now.

This gap between what people think cars can do and how safe they actually feel is a major hurdle for advanced self-driving models. It’s not just about making the technology work; companies and regulators have to convince people that it won’t fail. Until that confidence takes root, sleeping during a drive will stay out of reach for nearly everyone, which shows how much we value being in charge and knowing what comes next.

9. The Primal Need for Control: Why People Fear Disengagement

Beyond worries about malfunctions, people’s main hang-up about sleeping in driverless cars is a deep-rooted desire to stay in control. In the survey, most respondents said the inability to take over is why they wouldn’t sleep during a ride. It’s not only about steering; it’s also a fear of what might happen around them while the car handles everything on its own.

People want control when cars drive themselves:

- Losing awareness of what’s happening is what scares people most about sleeping in a self-driving car.

- Unpredictable human drivers sharing the road make self-driving cars harder to trust.

- Many people trust familiar forms of travel more because they simply seem less risky.

- Even advanced software can’t fully satisfy the human need to feel in control.

The fear grows because people also admit they don’t trust other drivers. That shows they understand a tricky part of the problem: trusting the robot car isn’t enough if everyone else on the road drives erratically. Sleeping while software dodges unpredictable humans leaves you feeling vulnerable, no matter how capable the machine is.

This strong need for control becomes clearer when driverless cars are compared with other forms of transport. More than 50% of respondents said they’d rather nap on a bus than in a driverless car, about 64% would feel more comfortable on a train, and nearly 67% would prefer a plane. That gap shows how established systems, such as trains run by human operators under clear rules, offer a reassurance that self-driving cars haven’t yet matched. Even when you’re just along for the ride, understanding how things work is more comforting than guessing what an automated machine might do next.

10. The Cybersecurity Threat: Hacking and Its Implications

A big worry about self-driving cars, especially when it comes to napping inside, is hacking. As more devices connect to the internet, each one becomes a potential target, so the idea that someone could take control of your vehicle remotely is frightening. That fear shows people understand how technology-heavy systems can fail in subtle ways.

Why cybersecurity is central to trust:

- Fear of hacking undermines trust in self-driving cars, even as people want safer rides free of risks they can’t control.

- A successful attack could disrupt traffic or delay emergency responders.

- Manufacturers should treat cybersecurity as seriously as crash testing.

- Without strong cybersecurity, few people will trust a car enough to sleep while it drives.

People clearly worry about self-driving cars being taken over by hackers. Although experts haven’t pinned down exactly how or how often such attacks might happen, they agree the consequences could be severe. And it’s not just one car at risk; entire systems could be affected. A large-scale attack could bring city traffic to a halt and block key routes, so that even ambulances couldn’t get through.

This threat undermines the basic sense of safety you need to fall asleep. If hackers could take over the car’s “brain” remotely, the idea of safely sleeping during a ride falls apart. If companies want people to trust these cars, they have to show that their systems are strongly protected against intrusion, treating cybersecurity as seriously as seat belts or airbags. People need to know their data and their lives are well protected.